I want to quickly bring everyone up to date on the subject. First, the facts:

- Humans are complex machines.

- Our minds are complex computers running complex programs.

This isn't really open to debate any longer, and the only valid philosophical viewpoint is one that takes the above into account. To get comfortable with these ideas it helps to have at least some idea about molecular biology and neurophysiology. There is nothing mysterious at the basic level down there - the complexity is in the interactions.

Our life is essentially just proteins being built according to a large collection of "IF THEN" instructions written in our DNA. Our metabolism and our behaviour are just a long sequence of chemical reactions going on in our bodies. When we move a finger, this happens because an electro-chemical signal came to the muscle cells from a particular brain neuron. The interactions between neurons are just proteins being synthesized, randomly moved around, sticking to other proteins, reacting or not reacting, etc. There are no mysterious quantum effects, it's just very complex chemistry.

To come to grips with this it helps to know that there are already some simple viruses that we understand down to the smallest detail (down to the atom). When you get to the bottom of things, there is no soul or anything, it's just atoms. It also helps to know a bit about neurophysiology (read Mapping the Mind by Rita Carter) to start to realise that all our behaviour is programmed.

Now that we have the facts, here is the conclusion:

We can simulate the brain or the whole human organism and the simulation will run just as well as the real thing. The implementation is irrelevant: the interactions of two hydrogen atoms in two proteins do not possess any special significance. What matters is the program, because the program is all there is. The underlying computing substrate can be anything.

Some people may be uncomfortable with that, but there is simply no evidence against it. We can already simulate neurons, and as far as we know the simulations work just as well as real neurons. There are also reasons to think that our simulation doesn't need to be accurate down to the single neuron, because our brain evolved to be resistant to the loss of neurons.
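To give a feel for what simulating a neuron can mean, here is a minimal sketch of a leaky integrate-and-fire model, one of the simplest textbook neuron models. It is far cruder than the simulations referred to above, and every name and parameter value in it (`simulate_lif`, `tau`, `v_threshold`, `resistance` and so on) is an illustrative assumption, not a measurement.

```python
def simulate_lif(input_current, steps=1000, dt=0.1, tau=10.0,
                 v_rest=-65.0, v_threshold=-50.0, v_reset=-65.0,
                 resistance=10.0):
    """Simulate a leaky integrate-and-fire neuron.

    Returns the list of spike times (in the same time unit as dt).
    All default values are illustrative assumptions.
    """
    v = v_rest
    spike_times = []
    for step in range(steps):
        # Euler step of: dv/dt = (-(v - v_rest) + R * I) / tau
        v += dt * (-(v - v_rest) + resistance * input_current) / tau
        if v >= v_threshold:
            # Threshold crossed: record a spike and reset the membrane.
            spike_times.append(step * dt)
            v = v_reset
    return spike_times


if __name__ == "__main__":
    for current in (0.0, 1.0, 2.0, 4.0):
        print(current, len(simulate_lif(current)))
```

With these assumed values a weak input never reaches the threshold, while a stronger input makes the model fire, and fire faster as the input grows. That is the basic input-output behaviour a simulated neuron has to reproduce.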

This is also important - our brains have no grand design, they are just highly evolved structures of organic molecules. It's not hand-written software code, where changing one bit can destroy a program - it's a genetically (in both senses) evolved mess that was functional from the very beginning, when it was just a single neuron 3 billion years ago or so. Our brain has evolved to have parts added or removed. We can't break it by careful tweaking, and replacing real neurons with simulated ones is not going to break everything (once we get over a certain accuracy threshold).

With the feasibility of uploading covered, let's get to the mechanics.

The simplest "brute-force"

There is also an inefficient method, which is good for one thing - to persuade stubborn uninformed humans that uploading is indeed possible. The method is typically used in (perhaps not surprisingly) thought experiments. :) It works like this: you take one neuron and simulate it down to the single atomic interaction. You then replace the real neuron with a chemo-electro-mechanical device that runs the simulation or gets the results of the simulation from an outside computer. You connect that artificial neuron with the other neurons properly. Is the person whose brain we are working on still the same person? Obviously yes, because even if that artificial neuron went haywire, the brain could handle it - it has been known to handle an iron rod going right through it. But since the artificial neuron works just as well, the brain would work just as well. Now we replace all neurons one by one with artificial ones. Does that person stop being himself? Obviously not, because there are no important functional changes (all neurons still work the same way) and there is no discontinuity. Of course, I am jumping over lots of steps here (most authors spend pages dwelling on this possibility), but I hope you get the idea. In the end we essentially have a computer (or a bunch of computers) running the mind and the person is still himself.
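The structure of this thought experiment can be sketched as a toy program. The classes and functions below (`BiologicalNeuron`, `ArtificialNeuron`, `replace_one_by_one`) are hypothetical names invented for the illustration, and the "neurons" are trivial threshold units. The sketch simply takes the premise above for granted, namely that the artificial neuron reproduces the original's input-output behaviour exactly, and checks the behaviour of the whole network after every single swap.

```python
class BiologicalNeuron:
    """Stand-in for a real neuron: a simple threshold unit."""

    def __init__(self, weights, threshold):
        self.weights = weights
        self.threshold = threshold

    def fire(self, inputs):
        total = sum(w * x for w, x in zip(self.weights, inputs))
        return 1 if total >= self.threshold else 0


class ArtificialNeuron:
    """Different implementation, same input-output behaviour (assumed)."""

    def __init__(self, original):
        # "Scan" the original neuron and copy its parameters.
        self.weights = list(original.weights)
        self.threshold = original.threshold

    def fire(self, inputs):
        total = 0
        for i in range(len(inputs)):
            total += self.weights[i] * inputs[i]
        return int(total >= self.threshold)


def network_output(neurons, inputs):
    """Behaviour of the whole 'brain' for one stimulus."""
    return tuple(n.fire(inputs) for n in neurons)


def replace_one_by_one(neurons, probes):
    """Swap neurons in place, one at a time, checking behaviour each step.

    Returns True if the network answered every probe identically
    after every single replacement.
    """
    baseline = [network_output(neurons, p) for p in probes]
    for i in range(len(neurons)):
        neurons[i] = ArtificialNeuron(neurons[i])
        if [network_output(neurons, p) for p in probes] != baseline:
            return False
    return True


if __name__ == "__main__":
    brain = [BiologicalNeuron([1, -2, 3], 1),
             BiologicalNeuron([2, 2, -1], 2),
             BiologicalNeuron([-1, 1, 1], 0)]
    probes = [(0, 0, 0), (1, 0, 1), (1, 1, 0), (0, 1, 1), (1, 1, 1)]
    print(replace_one_by_one(brain, probes))
```

At no step is there a point where the network's behaviour changes, which is the "no discontinuity" part of the argument. The sketch shows only the shape of the reasoning; it says nothing about how hard it is to build a replacement that really is functionally exact.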

By the way, all the "controversial" questions of whether the copy is still you are rubbish. The copy is a copy, which in the case of software is indistinguishable from the original. These questions are nonsensical. People in the future will be happily forking themselves and happily destroying the copies once they've done their job, sometimes incorporating parts of the copies into the main process. Our notions of human/mind/individuality/etc. will have to accommodate the future fractal world. There will be neither only one you nor several of you; there will be a non-integer quantity of you that is constantly changing depending on the needs of the moment.

But the big thing here is that we aren't just replacing the neurons or inserting chips, we are removing the mind from its substrate. It is no longer location- and substrate-dependent. You can have that optical upgrade run on a PDA or on the Internet in a distributed way. You can have that perfect memory backed up online on a distributed network or on a server in a secure location. This way you already have "partial uploads". This is what complexity is about. People will not keep their brain in a jar, and they will not keep it in their head. Parts of people will run in different ways. People may have partly robotic bodies, with some of the intelligence (required to operate them) running on computers in those bodies. People will have parts of their personalities still in parts of their original brain, and some parts of them will be running in microchips. These people will not be facing "total conversion", they will just be normal people adapting to new opportunities. There won't be a single right way to upload. This will happen gradually, and the process will be shaped by free choices, by market forces and by brain health research.

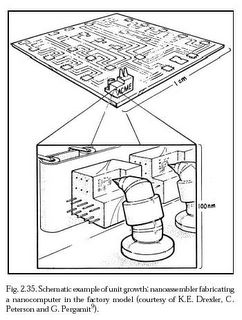

After we have been running these partial (or full) uploads for some time, we will get mature advanced nanotech, i.e. very small (not just built on the nano level, but designed on the nano level, so that every atom is in its optimal place and nothing is wasted in the design) and self-replicating nanobots. At that point the favourite uploading procedure would be to get a nanobot to each neuron and then either 1) study the neuron, consume it and stay in its place, acting just like it, or 2) study the neuron and send the data to the central computer. If the first way is used, we get a nanotech brain that can effortlessly be converted to an uploaded mind (the difference being that an uploaded brain doesn't have to run inside the skull - it can be distributed). If the second way is used, you have the brain intact and can either destroy it or keep it running (then you get two persons, which wouldn't really be a problem, as I said before).

With all that flexibility the question of substrate will become pretty meaningless. With computing power as cheap (essentially free and unlimited) as it will be then, our minds will run wherever it is optimal and we won't care that much about the choice. We won't be a civilization of brains in vats, we will be totally ok with being "executed" anywhere, even more comfortable than we are with moving around in meatspace. We will also be fine with parts of our minds not being neural simulations, but software written in FORTRAN. :) Since we are just programs, not only does it not matter what they are run on, it also doesn't matter how exactly they are written. Those parts of the code (such as our susceptibility to optical illusions) that aren't perfect can be rewritten. Of course, where you can rewrite one bit, you can rewrite anything. And this will totally eliminate the distinction between AIs and humans, because we will all be part AIs and the actual percentage of our humanness will become irrelevant. Now, it's actually possible that strong (human-level) AI will become possible before full uploading is possible. It is also possible that it's simpler to design strong AI from scratch, without basing it on human minds. In that case the Singularity (see below) may actually happen (not sure how likely it is) before full uploading (which may complicate things).

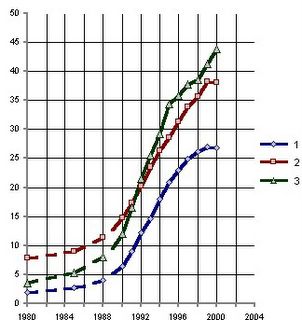

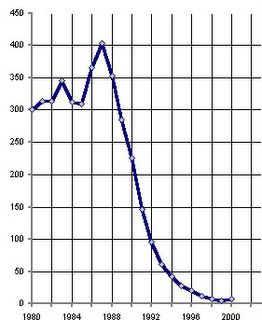

Now a bit about when this is going to happen. The key factors here are 1) our ability to run the simulation and 2) our ability to scan the brain structure. Ray Kurzweil wrote about that in detail, with extrapolations and explanations. The main point is that our ability to scan is rapidly improving, our ability to manufacture small objects is improving (we are moving towards nanotech) and the speed of our computers is improving as well. There is really no chance that this progress will stop or noticeably slow down. Nanotech and fast enough computers will come around 2020-2030. Of course, it can happen sooner or later - we can't be 100% sure about the date until it actually arrives, but all evidence points to about that time. But it's pretty certain that it will happen before 2100. And it probably won't happen until at least 2015.

Now, what will all this lead to? Of course, society will change a lot. How exactly it will change is a very big topic and we still haven't figured it out. One thing is certain - the change will be radical. The biggest consequence of uploading (or strong AI, if it happens earlier) is the ability to accelerate progress by increasing the speed and scale of thinking (you can make the uploaded mind or AI perform faster just by adding hardware). This leads to a rapid increase in the number of thoughts per second in the world, which accelerates research (by that time, obviously, all experiments will be easier to do in simulation as well, so you aren't tied to reality in any way). This will allow us to quickly invent better nanotech and faster computers, which in turn leads to faster thinking. And it just so happens that the laws of physics allow for computers that are many orders of magnitude more powerful (per unit of volume) than the human brain. And we will be able to get to that point very quickly, because the faster we think, the faster we invent even faster computers, and the faster we think. We can become millions of millions of times "smarter" (though it may not be entirely correct to assume that smartness is linearly dependent on thinking speed) and we may get there in just a few years.
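The feedback loop described above can be made concrete with a toy model. The assumptions are mine and purely illustrative: designing each new generation of hardware takes a fixed amount of thinking, the first generation takes two years of wall-clock time, and every generation doubles the speed of thought. The function name `wall_clock_years` and its parameters are hypothetical.

```python
def wall_clock_years(generations, first_generation_years=2.0, speedup=2.0):
    """Total wall-clock time needed to go through `generations` upgrades.

    Each upgrade needs the same amount of thinking, but the thinkers
    run `speedup` times faster after every upgrade (toy assumption).
    """
    total = 0.0
    speed = 1.0
    for _ in range(generations):
        total += first_generation_years / speed
        speed *= speedup
    return total


if __name__ == "__main__":
    for n in (1, 2, 10, 40):
        print(n, "generations:", wall_clock_years(n), "years,",
              "speed x", 2.0 ** n)
```

Under these assumptions the time per generation shrinks geometrically, so the total never exceeds four years no matter how many generations are run, while forty doublings already give a speedup of roughly a million million. Real progress has no reason to follow such a clean curve, but the sketch shows why a loop of this kind can cover many orders of magnitude in a short time.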

This event is called the Technological Singularity and it's a very big deal. In fact, you are almost certainly unable to fully comprehend how profoundly, hugely important that event is. You should read Staring into the Singularity to even be able to begin to start to comprehend it.

Well, I hope you are impressed. And remember, this is going to happen relatively soon. Better tell everyone.
